Tips for writing Node.js scripts using only built-in modules and async

For the last few years, Node.js has been my default scripting language, and I've spent the vast majority of my scripting time in it. I've dabbled with bash a bit too, but it's still so weird and hard to test, and I'm such a TypeScript fan that I generally prefer to go the TypeScript route as often as possible.

Below is a list of tips to make writing Node.js scripts fun, fast, and easy.

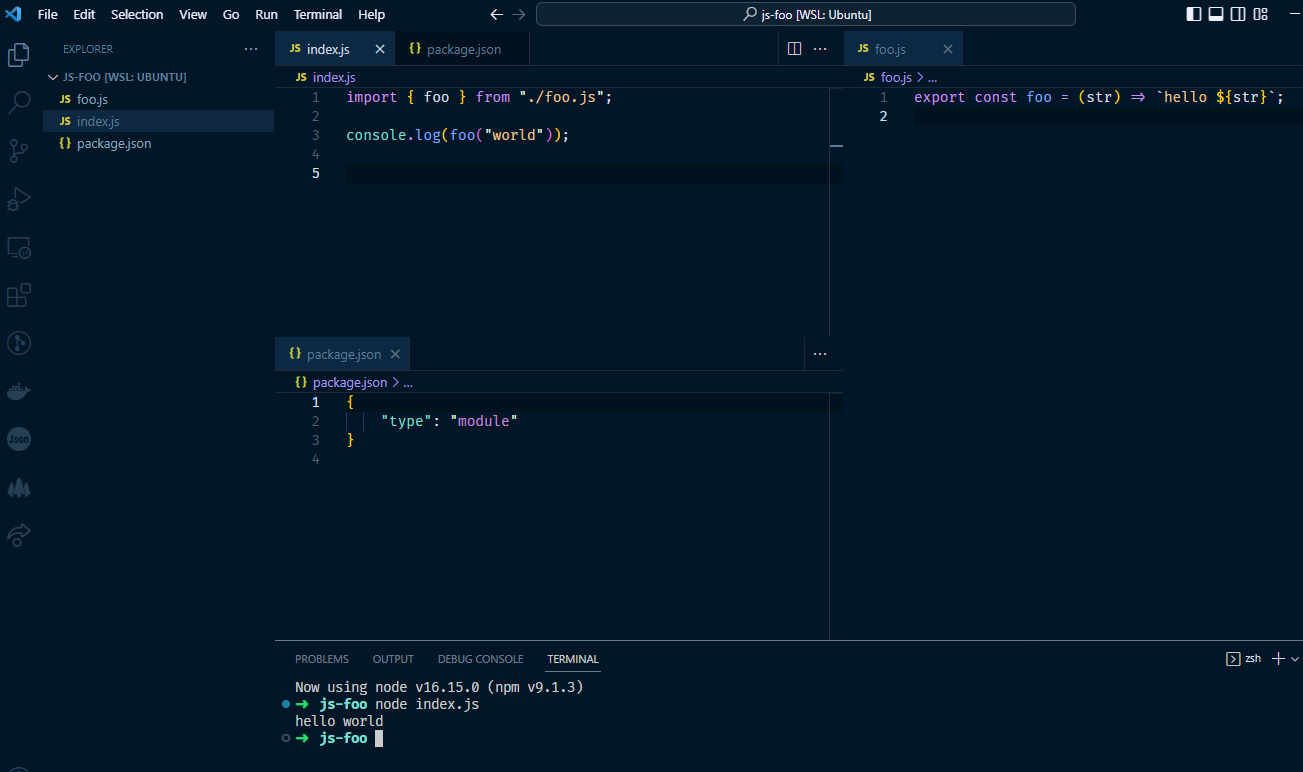

Use ES Modules

Yes! You can do it now! However, thar be dragons.

In general tho, if you want to use ES modules in node, you can...

- Name your file with `.mjs` on the end of it
- Use a `.js` file in a place where the closest `package.json` has `"type": "module"`
- Use `node --eval` and pass `--input-type=module`

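Similar rules apply if you want to keep a file using CommonJS:

- Name your file with `.cjs` on the end of it
- Use a `.js` file in a place where the closest `package.json` has `"type": "commonjs"` (or has no `"type"` field at all, since CommonJS is the default)
- Use `node --eval` and pass `--input-type=commonjs`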
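To see these `--input-type` rules in action without creating any extra files, here's a small sketch that runs the same one-liner twice through the current Node binary (`process.execPath`) and reports whether `require` exists. It assumes Node v14.8+ (for top-level await) and that you save it as an `.mjs` file; `evalAs` and the file name are hypothetical.

```javascript
// Save as check-input-type.mjs (hypothetical name) so top-level await is allowed.
import { execFile as _execFile } from 'node:child_process';
import { promisify } from 'node:util';

const execFile = promisify(_execFile);

// Hypothetical helper: run a snippet with `node --eval` under the given --input-type.
const evalAs = async (inputType, code) => {
  const { stdout } = await execFile(process.execPath, [
    `--input-type=${inputType}`,
    '--eval',
    code,
  ]);
  return stdout.trim();
};

const snippet = 'console.log(typeof require)';
const asModule = await evalAs('module', snippet);
const asCommonjs = await evalAs('commonjs', snippet);

// ES modules have no `require`; CommonJS does.
console.log({ asModule, asCommonjs });
```

Running it with `node check-input-type.mjs` should print `{ asModule: 'undefined', asCommonjs: 'function' }`.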

There are a couple of provisos, a, a couple of quid pro quos

When you switch to using ES Modules in node, you have to:

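- Always include the extension in your imports...

```javascript
import { foo } from "./bar.js";
```

- Use `import` statements (or dynamic `import()`) instead of `require`
- Do without `__dirname` and `__filename`, which aren't defined in ES modules. This doesn't work...

```javascript
console.log(__dirname); // throws a ReferenceError in an ES module
```

But, this does...

```javascript
import path from 'node:path';
import { fileURLToPath } from 'node:url';

// import.meta.url is this file's file:// URL; convert it to a path first.
const __filename = fileURLToPath(import.meta.url);
const __dirname = path.dirname(__filename);
```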
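If you still need `require` inside an ES module (for a CommonJS-only file, say), `createRequire` from `node:module` can build one for you, and dynamic `import()` handles loading a module only when you actually need it. A minimal sketch for an `.mjs` file; the `--os` flag is hypothetical.

```javascript
import { createRequire } from 'node:module';

// Build a CommonJS-style `require` that resolves specifiers relative to this file.
const require = createRequire(import.meta.url);
const { posix } = require('node:path');
const joined = posix.join('scripts', 'build.mjs');

// Dynamic import() returns a promise for the module's namespace object,
// so it pairs nicely with top-level await when a module is only needed sometimes.
const wantOs = process.argv.includes('--os'); // hypothetical flag
const os = wantOs ? await import('node:os') : null;

console.log(joined, os ? os.platform() : '(node:os not loaded)');
```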

You might also run into issues with some of the packages you import from `node_modules` (older CommonJS-only packages, for instance), so just be careful. But if you're generally sticking to Node's built-in modules and utilities anyway, you should be pretty good to go.

Use top-level await

Top-level await became available (without a flag) in Node v14.8. For the longest time, I'd write a wrapper like this every time I got ready to write a Node.js script...

```javascript
const main = async () => {
  // await some stuff in here
};

main().catch((err) => {
  console.error(err);
  process.exit(1);
});
```

I did that so often that I wrote VSCode snippets for it. Heh.

But the cool thing is, you can now just use `await` at the top level of a JS file.

NOTE: you can only do this in a "module" context (see "Use ES Modules" above).
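The wrapper's `.catch()` doesn't have to disappear either: a plain top-level `try`/`catch` does the same job. A minimal sketch, where `readJson` is a hypothetical helper; it sets `process.exitCode` instead of calling `process.exit()` so pending output gets flushed before the script exits with a failure code.

```javascript
import fs from 'node:fs/promises';

// Hypothetical helper: read and parse a JSON file.
const readJson = async (file) => JSON.parse(await fs.readFile(file, 'utf8'));

try {
  // Without the try/catch, a rejection here would still end the script,
  // just with a raw stack trace instead of a friendly message.
  const pkg = await readJson('./package.json');
  console.log(pkg.name);
} catch (err) {
  console.error(`Could not read package.json: ${err.message}`);
  // Setting exitCode (instead of calling process.exit()) lets pending output flush first.
  process.exitCode = 1;
}
```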

```javascript
import fs from 'node:fs/promises';

// Passing 'utf8' returns a string instead of a Buffer.
const pkg = await fs.readFile('./package.json', 'utf8');
console.log(JSON.parse(pkg).name);
```

Use the new-ish promise-based APIs along with the `node:` prefix

As of Node v14.18 and v16, you can import or require Node's built-in modules (things like `path`, `fs`, etc.) using the `node:` protocol in the `import` or `require` statement. This prevents you from accidentally loading a third-party package with the same name instead of the built-in.

```javascript
import fs from "node:fs/promises";
import stream from "node:stream/promises";
import dns from "node:dns/promises";
import timers from "node:timers/promises";
```
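Here's a rough sketch of a few of those promise-based APIs working together: `fs/promises` for files, `stream/promises` for awaiting a whole stream pipeline, and `timers/promises` for an awaitable sleep. It assumes Node v16+ and works in a temporary directory so it cleans up after itself; the file names are hypothetical, and `dns/promises` is left out because it needs network access.

```javascript
import fs from 'node:fs/promises';
import { createReadStream, createWriteStream } from 'node:fs';
import os from 'node:os';
import path from 'node:path';
import { pipeline } from 'node:stream/promises';
import { setTimeout as sleep } from 'node:timers/promises';
import { createGzip, createGunzip } from 'node:zlib';

// Work in a throwaway temp directory so the example leaves nothing behind.
const dir = await fs.mkdtemp(path.join(os.tmpdir(), 'node-script-'));
const source = path.join(dir, 'notes.txt');
const gzipped = `${source}.gz`;
const restored = path.join(dir, 'restored.txt');

await fs.writeFile(source, 'hello from a node script\n'.repeat(100));

// pipeline() resolves once every stream in the chain has finished, or rejects on the first error.
await pipeline(createReadStream(source), createGzip(), createWriteStream(gzipped));
await pipeline(createReadStream(gzipped), createGunzip(), createWriteStream(restored));

// An awaitable sleep, handy for simple retries or polling.
await sleep(10);

const original = await fs.readFile(source, 'utf8');
const roundTripped = await fs.readFile(restored, 'utf8');
const { size: gzSize } = await fs.stat(gzipped);
console.log(`round trip ok: ${original === roundTripped}, gzip size: ${gzSize} bytes`);

await fs.rm(dir, { recursive: true, force: true });
```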

Also, for everything else that takes a standard Node-style `(err, result) => {}` callback, you can always fall back on `util.promisify`.

```javascript
import { exec as _exec } from 'node:child_process';
import { promisify } from 'node:util';

// The promisified exec resolves with { stdout, stderr } (and rejects on a non-zero exit).
const exec = promisify(_exec);

const out = await exec('git status');
console.log(out.stdout);
```
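`promisify` works on any function whose last parameter is a Node-style `(err, result)` callback, not just built-ins. A sketch with a made-up `addLater` function standing in for some callback-based API:

```javascript
import { promisify } from 'node:util';

// Hypothetical callback-style function following Node's (err, result) convention:
// the first callback argument is an error (or null), the second is the result.
function addLater(a, b, callback) {
  setImmediate(() => {
    if (typeof a !== 'number' || typeof b !== 'number') {
      callback(new TypeError('addLater expects two numbers'));
      return;
    }
    callback(null, a + b);
  });
}

const addLaterAsync = promisify(addLater);

// Success: the promise resolves with the callback's second argument.
const sum = await addLaterAsync(2, 3);

// Failure: the promise rejects with the callback's first argument.
let failure;
try {
  await addLaterAsync('2', 3);
} catch (err) {
  failure = err;
}

console.log(sum, failure instanceof TypeError);
```

It should print `5 true`.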

Use child_process effectively

The `child_process` module is one of the most useful modules in a script writer's backpack. You can run any arbitrary command on the machine using its `spawn` or `exec` methods. However, knowing when and how to use each of them is essential.

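The most fundamental difference between `spawn` and `exec` is...

- `spawn` creates and returns a child process that you can interact with easily via streams, as the output arrives
- `exec` runs a command in a shell and buffers its output, handing you the complete stdout and stderr once it finishes. That buffer is capped by the `maxBuffer` option (1024 * 1024 bytes by default in current Node versions; older versions used 200 KB), and the child is killed if it prints more than that

Therefore, most of the time `exec` will do what you need, but if you need some sort of interactivity or have a lot of output, use `spawn`.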
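To make the buffering difference concrete, here's a sketch that runs the same noisy child (a Node one-liner via `process.execPath`, so it works wherever Node does) through both APIs, with `exec`'s `maxBuffer` deliberately lowered to 1,000 bytes. The 5,000-byte output size is arbitrary.

```javascript
import { exec as _exec, spawn } from 'node:child_process';
import { once } from 'node:events';
import { promisify } from 'node:util';

const exec = promisify(_exec);

// A child process that prints 5,000 bytes to stdout.
const script = "process.stdout.write('x'.repeat(5000))";

// exec buffers everything in memory; past maxBuffer the child is killed and the promise rejects.
let execError;
try {
  await exec(`"${process.execPath}" -e "${script}"`, { maxBuffer: 1000 });
} catch (err) {
  execError = err;
}
console.log('exec failed with:', execError?.code);

// spawn hands output over in chunks as it arrives, so there's no buffer limit to hit.
const child = spawn(process.execPath, ['-e', script]);
let received = 0;
child.stdout.on('data', (chunk) => {
  received += chunk.length;
});
const [exitCode] = await once(child, 'close');
console.log(`spawn received ${received} bytes, exit code ${exitCode}`);
```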

One of the examples above used `exec` to call `git status`. Another example could be an abstraction over a Unix command like `find`.

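```javascript
import { exec as _exec } from 'node:child_process';
import { promisify } from 'node:util';

const exec = promisify(_exec);

const [, , filename, directory] = process.argv;

if (!filename || !directory) {
  console.error('Usage: node ./script.mjs <filename> <directory>');
  process.exit(1);
}

// Quote the arguments so the shell doesn't split paths with spaces or expand globs like *.js.
// (For untrusted input, prefer execFile, which skips the shell entirely.)
const out = await exec(`find "${directory}" -type f -name "${filename}"`);
console.log(out.stdout);

// run with...
// node ./script.mjs filename ./directory
```

Here's an example of using `spawn` to do something.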

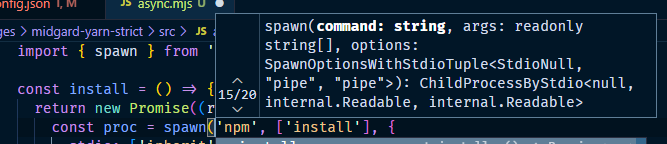

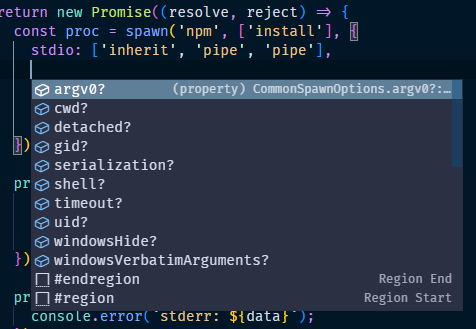

In this case, we're going to spawn an npm install in a different package in our repo.

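```javascript
import path from 'node:path';
import { spawn } from 'node:child_process';

const install = () => {
  return new Promise((resolve, reject) => {
    const proc = spawn('npm', ['install'], {
      // Hypothetical path to the other package in the repo; adjust to your layout.
      cwd: path.resolve('packages/other-package'),
      stdio: ['inherit', 'pipe', 'pipe'],
      env: {
        ...process.env,
      },
    });

    proc.stdout.on('data', (data) => {
      // `data` is a Buffer chunk; a long line can be split across chunks.
      if (data.includes('npm WARN')) {
        // Do something
      }
    });

    proc.stderr.on('data', (data) => {
      console.error(`stderr: ${data}`);
    });

    // Fires if the process can't be started at all (e.g. npm isn't on the PATH).
    proc.on('error', reject);

    proc.on('close', (code) => {
      if (code !== 0) {
        return reject(new Error(`npm install exited with code ${code}`));
      }
      resolve();
    });
  });
};

try {
  await install();
} catch (e) {
  console.error("Something went wrong with the install.", e);
  process.exit(1);
}
```

First thing to note here is that we're going to create a function that returns a new Promise. That way we can await it at the top level.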

Let's take a look at the arguments we're passing to `spawn`. (The full `spawn` API is documented in the Node.js `child_process` docs.) The first is the top-level command, in this case `npm`. The second is an array of the arguments we want to pass to `npm`, in this case just `install`. If we wanted to pass multiple things to `npm`, we'd add each one as its own element in that array, such as `['install', '--save-dev', '--legacy-peer-deps']`.
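Because `spawn` doesn't go through a shell by default, each element of that array reaches the child as exactly one argument, spaces and shell metacharacters included. A sketch that checks this by spawning Node itself (`process.execPath`) instead of npm, so it runs anywhere Node does; the argument values are arbitrary.

```javascript
import { spawn } from 'node:child_process';
import { once } from 'node:events';

// Each element arrives as one argument: nothing is split on spaces or expanded by a shell.
const args = ['hello world', 'a;b', '*.js'];

const child = spawn(process.execPath, [
  '-e',
  'console.log(JSON.stringify(process.argv.slice(1)))',
  ...args,
]);

let output = '';
child.stdout.setEncoding('utf8');
child.stdout.on('data', (chunk) => {
  output += chunk;
});
await once(child, 'close');

const received = JSON.parse(output);
console.log(received);
```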

The third argument is the options object. By default, when you spawn a process, the `stdio` option is set to `'pipe'`.

The `'pipe'` option means that the `proc` returned from `spawn` will have `proc.stdin`, `proc.stdout`, and `proc.stderr` on it. These are streams, so you can use methods like `on` and `once` to respond to events such as `data`.

The `stdio` option can take `'pipe'`, `'inherit'`, or an array whose entries correspond to the child's stdin, stdout, and stderr, so you can pick which parts of the process you want to control...

If you don't need to use stdin or stderr, for example, you can switch those from `'pipe'` to `'inherit'`, which hands them straight through to the parent process.

```javascript
stdio: [
  'inherit' /* stdin */,
  'pipe' /* stdout */,
  'inherit' /* stderr */
],
```

Passing `stdio` like that 👆 would give you `proc.stdout`, but `proc.stdin` and `proc.stderr` would be `null`.
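A quick sketch to confirm that behavior (note that the option itself is spelled `stdio`), again spawning Node itself rather than npm so it runs anywhere:

```javascript
import { spawn } from 'node:child_process';
import { once } from 'node:events';

// stdin and stderr are shared with the parent ('inherit'); only stdout is piped back to us.
const child = spawn(process.execPath, ['-e', "console.log('piped')"], {
  stdio: ['inherit', 'pipe', 'inherit'],
});

// Inherited streams don't get a stream object on the child, so these are null.
console.log(child.stdin === null, child.stderr === null); // true true

let output = '';
child.stdout.setEncoding('utf8');
child.stdout.on('data', (chunk) => {
  output += chunk;
});
await once(child, 'close');
console.log(output.trim()); // piped
```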

Lastly, you can watch for the process you're spawning to close by using `proc.on('close', (code, signal) => {});`.

One more nice option is `cwd`, which controls the working directory the process is spawned in. It defaults to the parent's `process.cwd()`, but you can set it to whatever other path you actually want the process to run in.
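Putting `cwd` and the close event together, here's a sketch of a more compact variant of the spawn pattern. It swaps the hand-written Promise for `events.once()`, which resolves with the event's arguments (and rejects if the child emits `'error'` first), and it spawns Node itself in the OS temp directory rather than npm in a package, purely so it runs anywhere.

```javascript
import { spawn } from 'node:child_process';
import { once } from 'node:events';
import fs from 'node:fs/promises';
import os from 'node:os';

// Run the child in the OS temp directory instead of wherever the script was launched.
const targetDir = os.tmpdir();

const child = spawn(process.execPath, ['-e', 'console.log(process.cwd())'], {
  cwd: targetDir,
  stdio: ['ignore', 'pipe', 'inherit'],
});

let output = '';
child.stdout.setEncoding('utf8');
child.stdout.on('data', (chunk) => {
  output += chunk;
});

// events.once() turns the 'close' event into a promise resolving with [code, signal].
const [code, signal] = await once(child, 'close');

// Compare real paths: on some systems (e.g. macOS) the temp dir sits behind a symlink.
const childCwd = await fs.realpath(output.trim());
const expected = await fs.realpath(targetDir);
console.log({ code, signal, sameDir: childCwd === expected });
```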

Use TypeScript for an even cooler script writing experience

Generally I prefer to use TypeScript when writing just about anything these days, including scripts. There are a couple of different ways to get TypeScript's extra security blanket of checks when writing scripts like these.

First of all, if you're going to be using TypeScript and Node, make sure that you have the `@types/node` package installed as a devDependency. That will ensure that all the native modules have correct type information.

That in and of itself is super useful.

What's really worth noting, though, is that you don't HAVE to have your file end in a `.ts` extension. You can add a couple of options to a `tsconfig.json`: `"allowJs"` and `"checkJs"`. Set them both to `true`, and TypeScript (in your editor, or via `tsc --noEmit`) will type-check your plain `.js` scripts too.
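Here's a sketch of what that looks like in a plain `.js` script: types come from JSDoc comments, and a `// @ts-check` comment at the top turns on checking for a single file even without `"checkJs"`. It assumes `@types/node` is installed; `toOutputPath` and the paths are hypothetical. Node itself ignores both the comment and the JSDoc.

```javascript
// @ts-check
import path from 'node:path';

/**
 * Hypothetical helper: build an output path for a given input file.
 * @param {string} inputFile
 * @param {string} outDir
 * @returns {string}
 */
const toOutputPath = (inputFile, outDir) =>
  path.join(outDir, `${path.parse(inputFile).name}.min.js`);

const out = toOutputPath('src/app.js', 'dist');
console.log(out);

// Uncommenting the next line makes TypeScript (tsc or your editor) report an error,
// since a number isn't assignable to the `inputFile` string parameter.
// toOutputPath(42, 'dist');
```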

As of the 4.7 release, TypeScript supports Node's ES Modules too: set the `"module"` option to `node16` or `nodenext`, and it will respect the `"type": "module"` field in your `package.json` files (as well as the `.mts` and `.cts` extensions). All of that is covered in the TypeScript 4.7 release notes.

If you want to use `.ts` files instead of relying on `allowJs` and `checkJs`, you'll probably also want to install something to run them, like `@babel/register`, `ts-node`, or maybe even `esbuild-runner`. I still personally prefer the Babel route.

Conclusion

In the world of Frontend and DevOps, aka DivOps, writing scripts is inevitable. Writing scripts in JavaScript / TypeScript lets you use the JS skills you already have as a Frontend dev to interact with the machine for all kinds of automations, pipelines, etc. And bonus points: you can write unit tests for them, too. Go forth and write all your scripts in JavaScript!